Understanding patient needs throughout clinical trial participation

Senior Product Designer, IQVIA | 2022

User Research, Usability Testing, Journey Mapping, Persona Development

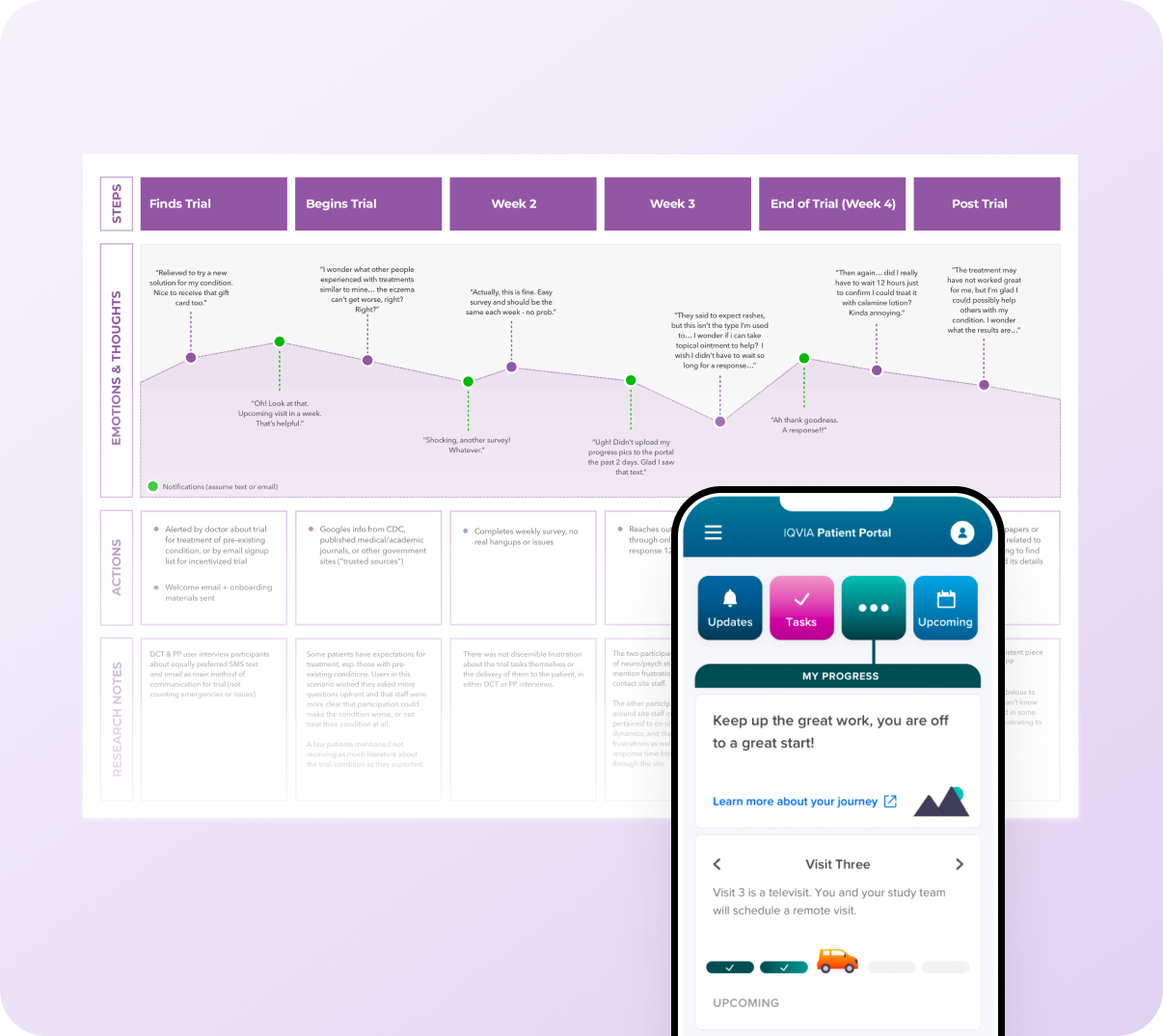

IQVIA's Patient Portal serves over 210,000 clinical trial participants across multiple therapeutic areas. The team was planning a UI redesign but lacked the foundational research needed to guide its priorities.

The risk: Without understanding what information and tasks mattered most to patients throughout their trial journey, the product team could optimize the wrong things, wasting budget on changes that didn't improve the patient experience.

The research question:

What information and tasks are most valuable to clinical trial participants during their trial journey, and what usability issues exist in the current experience?

My role: Design and execute end-to-end research—from study protocol through synthesis and cross-team presentation—delivering validated insights to inform the redesign strategy.

Challenge

Three constraints shaped the research approach:

Limited patient access: Government regulations restricted our ability to directly track patient demographics and behaviors, making segmentation difficult.

Redesign timeline pressure: The design team needed actionable insights quickly to inform the UI redesign roadmap.

Cross-product coordination: Multiple product teams served similar patient populations but operated independently. This made coordination harder, but it also created an opportunity for shared learnings.

Research Approach

I designed a mixed-methods study combining generative interviews with usability evaluation.

Primary objective:

Uncover and/or confirm suspected usability flaws in the current Patient Portal experience and identify the information/tasks that are most valuable to clinical trial participants, in order to inform the UI redesign effort.

Secondary objectives:

Understand patient experiences, needs, and attitudes as they navigate trial participation

Evaluate perceived value of vitals-tracking features under discussion

Establish a usability baseline using System Usability Scale (SUS)

Methods & Participants

User interviews (10 participants) - 60-minute moderated sessions

A/B concept testing (10 participants) - Evaluating proposed information hierarchy

Usability testing (5 participants) - Task-based evaluation with 11 scenarios + SUS scoring

Participant criteria balanced digital literacy with diversity in trial experience, so that findings would apply as broadly as possible across IQVIA's 210,000+ patient population.

Recruitment criteria:

Clinical trial participation in the last 12 months

25+ years of age

Average web expertise

Located in the United States

Participant profile:

Mix of mobile (6) and desktop (4) testers

7 testers had previous experience with clinical trial apps/websites

Average age: 41

Test Design

Unmoderated usability testing of the live Patient Portal in its production environment allowed participants to complete realistic tasks in their natural context.

Sample testing tasks included:

"Looking at this page, what milestone(s) have you completed in your clinical trial journey?"

"Find the Vitals measurement results from your latest visit"

"Find more information about the clinical trial you are participating in. Find the 'Study Type' of the clinical trial."

"Locate your list of study visits and open the visit details for Visit 3"

"Create a new task reminding yourself to take your study medication. You would like to be reminded next Monday at 8am."

"Mark a task as complete"

"Send a message to the Study Team letting them know that you need to reschedule your next visit"

"Find your account settings and update your phone number"

Qualitative feedback questions:

What information on the home page do they find valuable?

What information on the home page do they find least valuable?

Recommendations to improve the experience

System Usability Scale (SUS):

10 standard usability statements, each rated on a 5-point Likert scale

Scores tracked over time to measure changes in the app's usability
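For readers unfamiliar with how ten Likert ratings become a single 0-100 number such as 83.5, here is a minimal Python sketch of the published SUS scoring arithmetic. It is an illustration, not the tooling used in this study, and the function names `sus_score` and `study_sus` are hypothetical. The standard questionnaire alternates positively worded (odd) and negatively worded (even) statements. The statements quoted under Usability Results match the all-positive SUS variant, in which every item is scored the same way, so the sketch exposes that choice through an assumed `all_positive` flag.

```python
from statistics import mean


def sus_score(responses: list[int], all_positive: bool = False) -> float:
    """Convert one participant's ten 1-5 ratings into a 0-100 SUS score."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be on the 1-5 scale")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if all_positive or item_number % 2 == 1:
            # Positively worded item: agreement is good, contributes rating - 1.
            total += rating - 1
        else:
            # Negatively worded item (standard SUS only): contributes 5 - rating.
            total += 5 - rating
    # Each item contributes 0-4, so the raw total is 0-40; scale it to 0-100.
    return total * 2.5


def study_sus(all_responses: list[list[int]], all_positive: bool = False) -> float:
    """A study-level SUS is the mean of the per-participant scores."""
    return mean(sus_score(r, all_positive) for r in all_responses)


if __name__ == "__main__":
    # Hypothetical ratings for illustration only, not data from this study.
    print(sus_score([5, 1, 5, 1, 5, 1, 5, 1, 5, 1]))  # 100.0
    print(sus_score([4] * 10, all_positive=True))     # 75.0
```

Note that a SUS score is not a percentage: a score is interpreted by comparing it against benchmark distributions, which is why 83.5 is reported relative to the industry average of 68.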

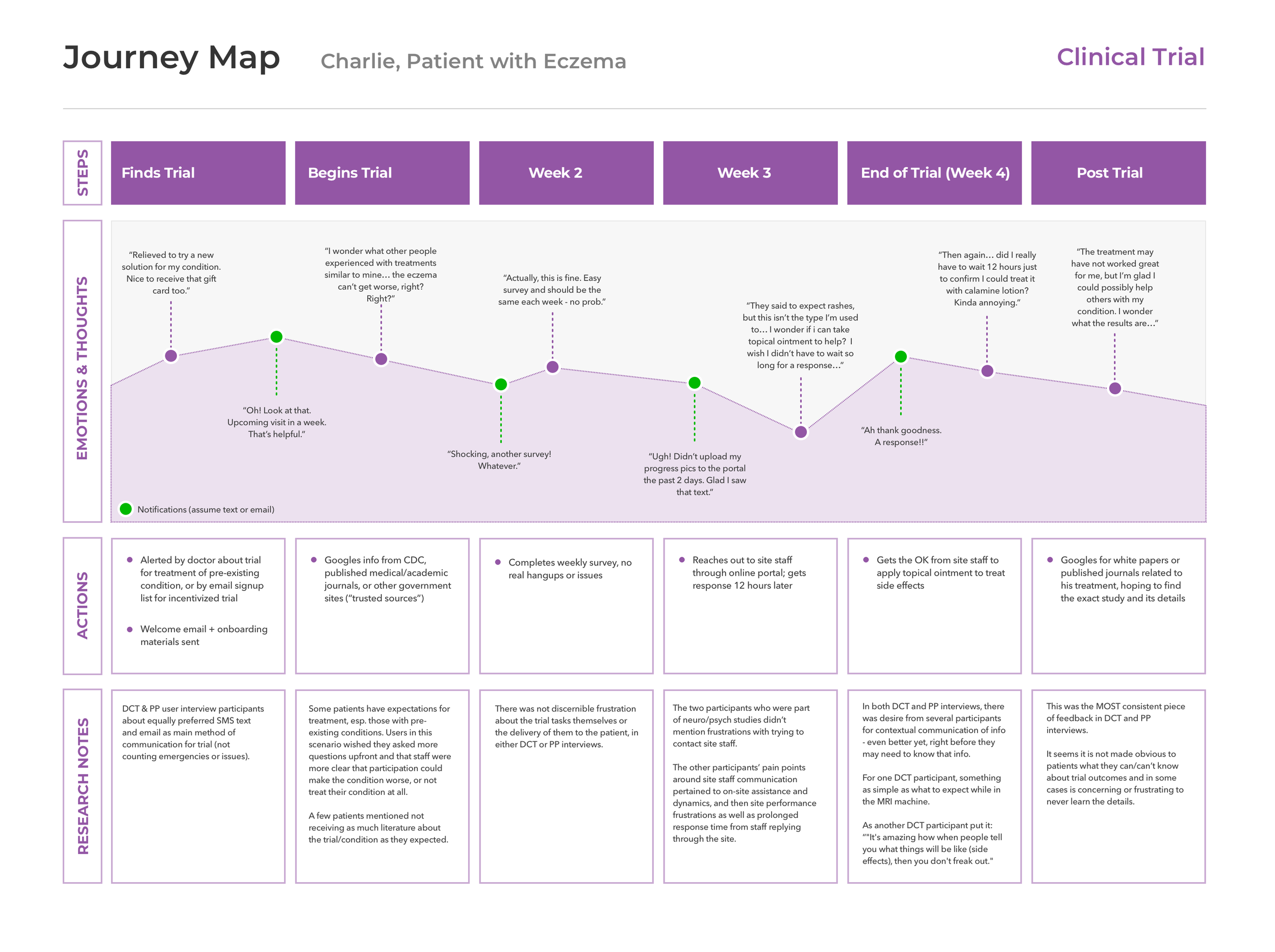

Synthesis

Affinity mapping became my primary synthesis method to organize and identify patterns across all research activities:

20 hours of interview recordings → 400+ discrete observations

Organized into 12 thematic clusters (motivations, pain points, preferences, behaviors)

Cross-referenced with task completion data and SUS scores

Created 3 patient personas and 1 comprehensive journey map from patterns

This process revealed insights that weren't obvious in individual sessions—for instance, notification overload affected patients regardless of trial type or age.

The affinity map served dual purposes:

Creating research artifacts (personas, journey maps) grounded in real user feedback

Providing reference material for product teams to revisit specific findings without re-reading transcripts

Key Findings

Finding 1: Information Transparency Gap

71% of participants expressed anxiety about 'what happens next' at trial visits

Why it matters: This led to missed visits, last-minute cancellations, and increased burden on clinical staff

Design implication: Prioritize visit preparation content and proactive timeline communication

Finding 2: Demand for Notification Control

Participants described checking their phones 'constantly' due to trial app notifications, even during work hours

Why it matters: Notification fatigue was the #1 cited reason for disengaging from the portal

Design implication: Introduce granular controls for notification timing, importance levels, and channels

Finding 3: Content Organization Over Visual Design

SUS score of 83.5 (top 10%) indicated usability mechanics weren't the problem

Why it matters: Freed the design team to focus budget on IA improvements rather than interaction redesigns

Design implication: Deprioritize visual refresh; focus on content hierarchy and task prioritization

Usability Results

System Usability Scale (SUS) score: 83.5 out of 100

'A' Rating

Top 10% when normalized against a study of 500 SUS scores (industry average: 68)

Most Valuable Home Page Content:

Task List

Information about the Study

Information about their Study Site

Least Valuable Home Page Content:

Generic helpful links and resources that do not pertain to their specific study

Top Recommendations:

Reorganize content and its hierarchy

Improve system response times

Detailed SUS responses showed high scores across all items, including:

"I could use the website without having to learn anything new" - 4.8 mean

"I think I could use the website without the support of a technical person" - 4.7 mean

"I thought there was a lot of consistency in the website" - 4.5 mean

Key Takeaways from Testing:

Most common recommendations for improvement were related to content organization and hierarchy rather than usability or styling

The high SUS score suggests that usability is not a deterrent to frequent logins

Participants largely agreed on the most and least valuable home page content

Less impactful usability shortcomings remain and should be addressed in the redesign:

Task with highest perceived difficulty: "Send a Message to Study Team"

A small number of testers could not find Account settings

Icons were not always recognized or understood

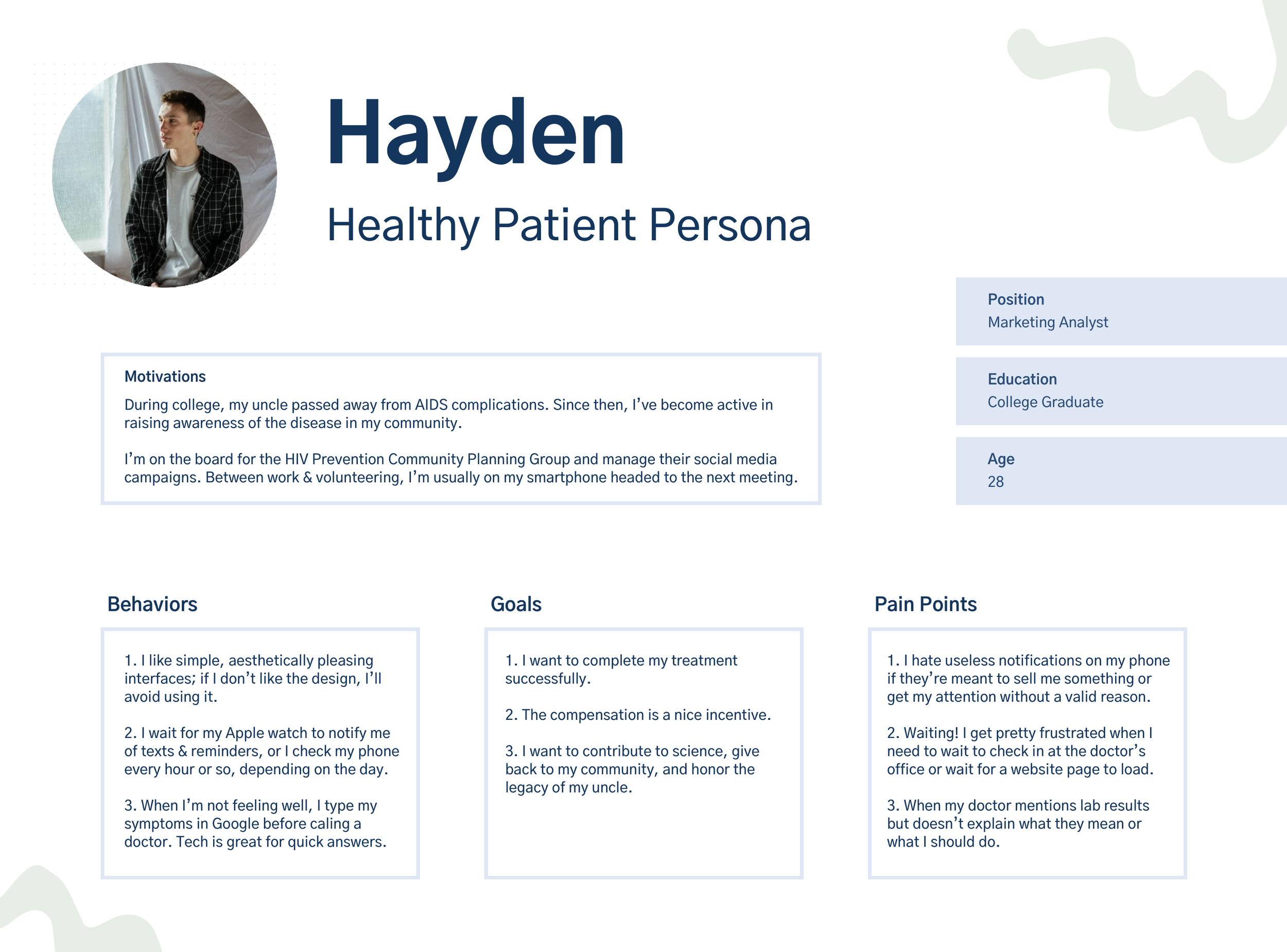

Research Artifacts

To help product teams internalize findings, I created a “healthy patient” persona reflecting common sentiments from user interviews. Personas convey who you are designing for in the broadest sense and help align the product vision. This persona tied directly back to affinity map themes, ensuring it represented real patterns rather than assumptions.

Journey Map

I created a high-level journey map documenting the patient experience across trial phases: pre-trial, enrollment, active participation (Weeks 2-3, then end of trial in Week 4), and post-trial.

Each journey phase included:

Thoughts & emotions (emotional curve showing patient sentiment)

Actions (specific behaviors patients described at each phase)

Research notes (connecting each step to specific findings for product team reference)

The journey map served two purposes:

Helping product teams see how each design decision fits into the larger patient experience

Documenting where insights were rooted in research findings

Future segmentation work: I had scoped plans to create journey maps for each patient population segment (pediatric, elderly, patients with caregivers) before transitioning to a new project in 2022. Government regulations limited direct demographic tracking, but we identified underutilized secondary research that could inform segmented maps and validate assumptions through targeted follow-up studies.

Recommendations

Based on research findings, I provided three strategic next steps to the product team:

Next Step 1: Validate assumptions with broader testing

Consider surveying the broader patient population to confirm certain assumptions about technology use & preferences by age group, followed by usability testing of a low-to-mid fidelity mobile prototype.

Next Step 2: Segment core user groups

Segment core user groups based on existing data and further generative research (what factors, such as age and motivation for participating, contribute to certain needs/attitudes/behaviors?)

Next Step 3: Conduct longitudinal studies

Consider opportunities for longitudinal studies in order to better understand information-seeking, communication with site staff, and trial/task notifications in the context of the trial alongside participants' daily lives.

Impact

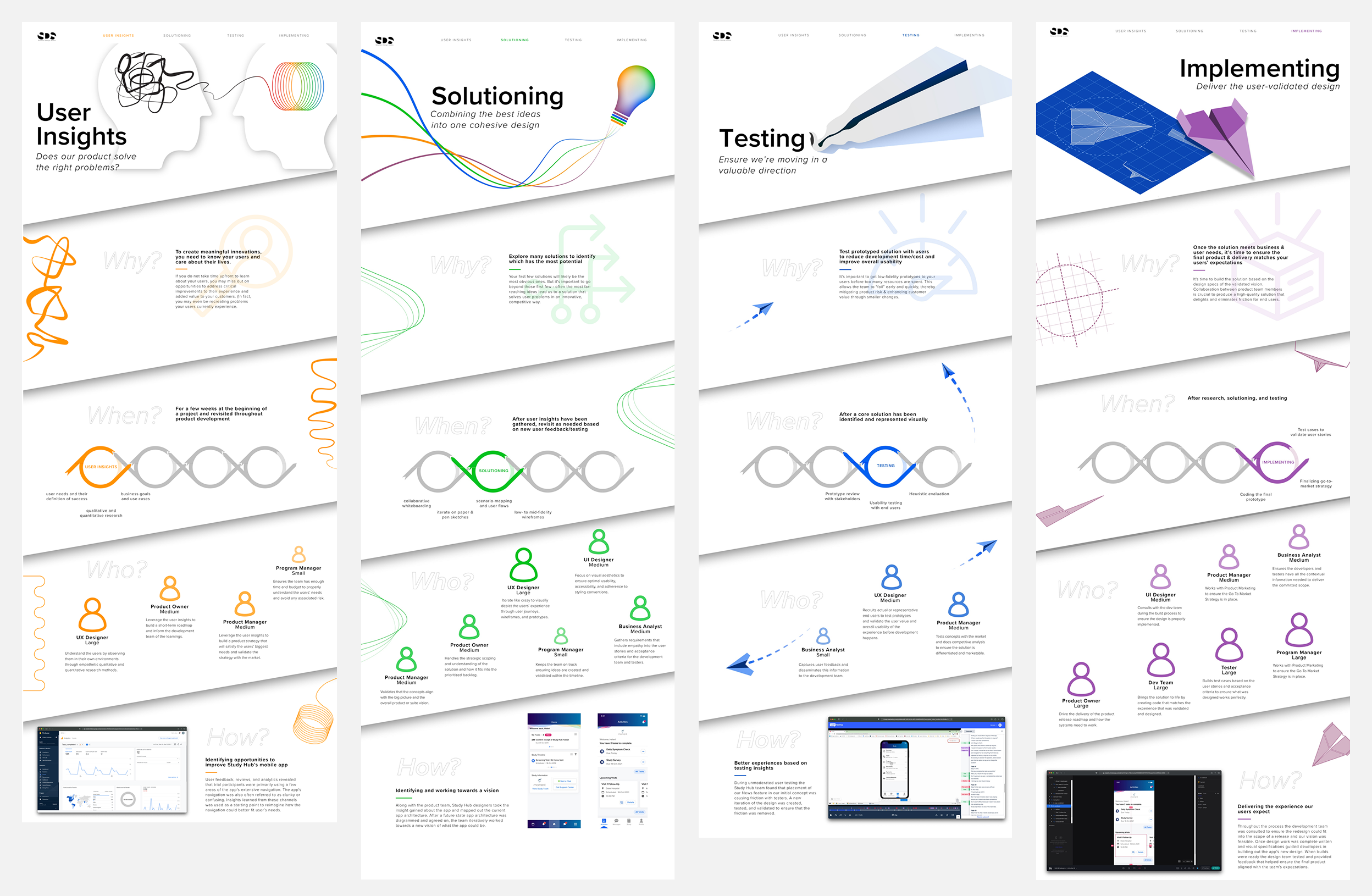

Established rapid research template:

Demonstrated that meaningful insights don't require months-long studies. The 2-week research sprint model I developed was adopted by other designers across IQVIA, shifting team culture from "we can't afford research" to "we can't afford not to research."

Created cross-product playbook:

Synthesized findings into a User Insights Playbook used by 5+ patient-facing product teams serving 210,000+ participants across the platform. This eliminated redundant research and created shared understanding of patient needs.

Built segmentation foundation:

Established baseline patient insights that enabled IQVIA to explore segmented populations (pediatric, elderly, patients with caregivers) through targeted follow-up studies.

Redirected redesign strategy:

The 83.5 SUS score (top 10% industry-wide) revealed that usability mechanics weren't the problem—content hierarchy was. This finding saved significant development budget by focusing the team on information architecture rather than interaction redesigns or visual styling.

Accelerated design-to-development:

Fast research turnaround (2 weeks per study) meant design teammates could incorporate validated feedback into prototypes immediately, reducing iteration cycles and expediting usability testing.

Shipped validated components:

Research-validated design components were incorporated into both desktop and mobile applications in Q2-Q3 2022, ensuring the redesign addressed real patient needs rather than assumptions.

What I Learned

Research timing matters:

In agile, your "down time" between release cycles is a perfect opportunity to switch gears and deeply focus on research. Rather than always being reactive to sprint deadlines, strategic research during lulls creates proactive insights.

User research is increasingly critical:

As world events change people's use of and attitudes toward technology (this research took place during COVID-19), user research becomes even more critical to product strategy & success. Assumptions decay quickly.

Low UXR maturity ≠ low impact:

On product teams with low UXR maturity, any amount of insight you can give them is appreciated—even if just a gut-check. Small, fast studies can build momentum for larger research initiatives.

UX Group Lead, IQVIA

“I always appreciated Moira's unshakable and level-headed tenacity, process orientation, and fierce devotion to the user.”

What I’d Do Differently

Expand the usability testing task set:

While 11 tasks provided good coverage, I would have added scenarios around notification management given how prominently that theme emerged in interviews.

Recruit more diverse demographics:

The average age of 41 and the requirement for "average web expertise" may have skewed the sample toward digitally comfortable users. I'd push to include participants with lower digital literacy to understand their barriers.

Build in follow-up mechanisms:

The three recommended next steps were solid, but I left before implementing them. I'd now create more detailed handoff documentation and identify stakeholder champions to maintain research momentum.

Create a research repository:

Affinity maps and artifacts lived in project files but weren't systematically cataloged. A centralized repository would have made findings more discoverable for future product teams.

Test the redesign concepts iteratively:

Given the high SUS score, I would have advocated for testing IA changes in isolation before committing to a full redesign, reducing risk of accidentally breaking what was already working.