Enabling faster exploration and decision-making for clinical trials

Senior Product Designer, IQVIA | 2020–2022

UX Research, Information Architecture, Interaction Design, Prototyping, Usability Testing

UI examples have been modified for confidentiality.

Adaptive clinical trials were becoming the industry norm, but the tools used to design them were slow, fragmented, and difficult to interpret. Biostatistics teams were managing high turnover, growing customer demand, and legacy programs that required specialized expertise and manual effort.

Vision

IQVIA Biostatistics leadership envisioned Trial Designer as "the Facebook of clinical trials meets a bleeding-edge calculator"—a platform that would make sophisticated statistical modeling collaborative, accessible, and scalable.

Challenge

Biostatisticians and medical reviewers had no integrated way to design, compare, or collaborate on trial protocols. Comparison happened via email and manual lookup across 2-3 different systems—a slow, error-prone process. R-code modeling, critical for advanced statistical work, was intimidating for novice users.

These constraints had measurable business impact:

Slow turnaround times for trial designs

Increased dependency on limited statistical experts

Inability to scale adaptive-design consulting

No way to compare designs or collaborate asynchronously within the tool

Growing pressure from clients for faster insights

Opportunity

This was a chance to create a modern, integrated platform—a flexible interface that would let biostatisticians rapidly explore design options, interpret outputs, compare results side-by-side, collaborate asynchronously through comments and ratings, and deliver polished recommendations to clients.

Projected cost savings + external licensing revenue: ~$300M annually.

Analysis

I joined three months after kickoff, inheriting a workshop, a competitive analysis, and an early scope of MVP features. Early biostatistician involvement was limited because they were busy supporting COVID-19 trials, so I relied on:

distilled notes from the research workshop

follow-up interviews with five internal adaptive-design experts

close collaboration with the Product Owner, BAs, and Solution Architect

heuristics from existing tools and statistical workflows

Research approach

5 in-depth interviews with adaptive design experts

A/B usability testing with 13 biostatisticians on input form patterns

Observational studies of how biostatisticians currently compared designs across legacy tools and email

Synthesis of workshop findings and workflow observations

These insights directly informed the IA model, input form redesign, comparison interface structure, and collaboration features.

Early wireframes my UX teammate and I assembled to understand current workflows across separate tools

From this work, three core problems emerged:

High cognitive load: Inputs were complex (20–30 fields per design), with correlations that weren’t obvious to novice statisticians.

Fragmented workflow: Statisticians bounced between R scripts, notebooks, legacy software, emails, and spreadsheets.

Slow decision-making: Comparing multiple design types required manually exporting outputs and stitching them together across 2-3 separate systems—there was no integrated comparison capability.

This gave us a clear north star: Reduce friction at every step, from input to exploration to comparison to collaboration.

Solutions

End-to-end workflow and IA

My first deliverable was a simplified product workflow and lightweight IA covering:

project creation

session management

design input

output generation

comparison + collaboration (net-new capabilities)

This model became the backbone of Sprint 0 and set expectations for the MVP.

A clear, end-to-end view of how projects move from setup to output—used to align the team on what the MVP had to support.

Inputs form: Solving for complexity

Each adaptive design type requires 20–30 inputs that feed R-powered computations. Trial Designer needed to serve both expert biostatisticians (comfortable writing R-code) and novices (who needed structured guidance)—embodying the "bleeding-edge calculator" part of the vision.

Our initial approach relied on traditional tooltip icons—hover to reveal definitions—but user feedback showed this was slow, visually noisy, and hard for novices to interpret in context.

I introduced a new pattern that grouped correlated fields and surfaced guidance inline—right where users needed it, not in a separate overlay.

This reduced distraction, gave novice users a clearer mental model, and built trust. By abstracting R-code complexity into structured input fields, the form made advanced statistical modeling accessible without sacrificing sophistication.

I ran an A/B test with 13 statisticians: 11 preferred the contextual pattern. The pattern was later adopted into IQVIA's internal design system, which is used by 80+ designers.

Before: Related inputs were spread across the page with no real hierarchy. Key definitions lived inside hover-only tooltips, which slowed people down and made the form harder to interpret, especially for less experienced users.

After: I reorganized the form into meaningful sections and moved explanations inline. This reduced scanning, improved comprehension, and tested better with internal statisticians: 11 of 13 preferred the contextual pattern.

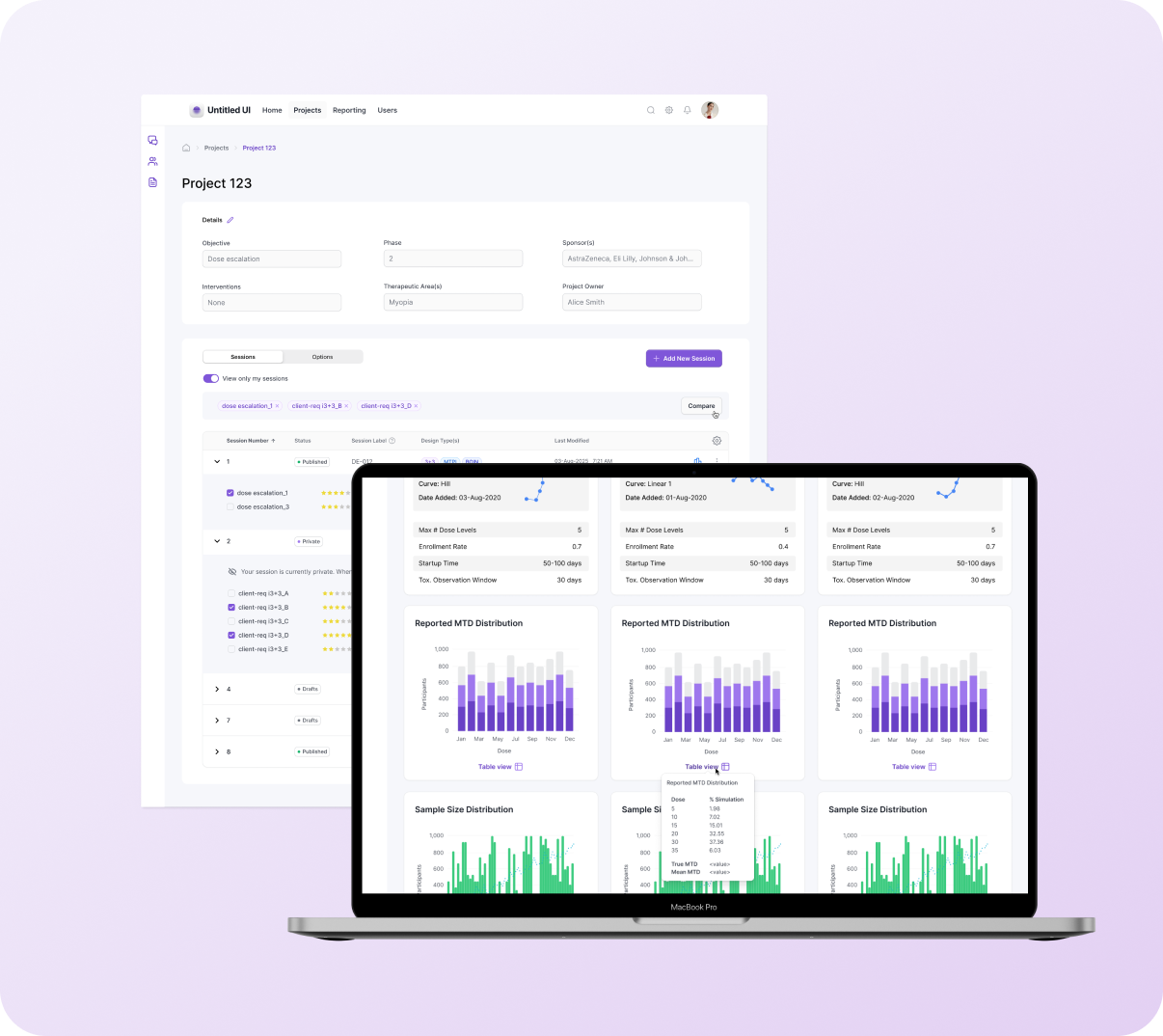

Projects page: Enabling comparison & collaboration

The project homepage organizes sessions and design options, which can number in the hundreds. Before this work, biostatisticians had no way to compare designs within Trial Designer—they relied on email threads and manual lookup across 2-3 legacy systems.

Early exploration showed:

the nested accordion structure wouldn't scale for hundreds of results

users couldn't quickly identify which results to compare

the "Compare" action wasn't discoverable

important attributes were hidden in nested accordions

users could only compare results within the same session—cross-session comparison meant working across multiple tabs and external systems

Before (left): Nested options obscured identifying details, and the table lacked the structure to scale to hundreds more options. After (right): A flat table with accordion organization makes selection and comparison easier.

Outputs: Results comparison

Because comparison happened outside Trial Designer, over email and manual lookup across 2-3 different systems, biostatisticians and medical reviewers struggled to evaluate designs side-by-side, maintain shared context, or track decisions in one place.

Outputs contain statistical graphs, confidence plots, and tabular metrics—voluminous information that reviewers rely on to decide which design should be recommended to clients. Early versions displayed each design in a separate vertical column, requiring reviewers to scroll down through one design, then scroll through the next to find corresponding metrics. Table data lived in separate sections, and without filtering by session or design type, users faced an overwhelming flat list of results—making it difficult to focus on relevant comparisons.

Solutions:

Modular card structure with shared vertical alignment: reorganized content so that equivalent information appears at the same position across all designs, enabling direct side-by-side comparison

Hierarchical organization: supports the Project > Session > Result structure, enabling users to compare within or across sessions as needed

Retail-style comparison model: compare multiple designs (up to 3 at a time) horizontally

Integrated table view as hover tooltip: consolidated tabular data beneath each chart as an on-demand overlay, improving accessibility and keeping visualizations unobstructed

"Default" visual-first view added in R2 (validated through beta testing)

Peer ratings and collaborative evaluation: allow project teams to upvote stronger designs asynchronously, reducing review cycles

Post-handoff recommendations: user-controlled column ordering, filtering for large data sets, additional sort options

These decisions made the page more scannable, more collaborative, and easier to interpret during client-facing preparation.

The prototype below demonstrates selecting trial sessions for comparison: users expand accordions, select designs across sessions, and navigate to the side-by-side Results view.

Partnerships

My role required translating domain complexity into a usable product—quickly. This meant:

Co-designing with biostatisticians: Weekly working sessions to validate formulas, field groupings, and data labels.

Partnering closely with developers: The R-code integration was substantial, so I worked directly with the R engineering team to confirm assumptions and align UI behaviors with mathematical outputs.

Supporting rapid documentation + story elaboration: I frequently helped BAs break features into epics and user stories to accelerate delivery.

Facilitating decisions across SMEs: Adaptive design experts often had differing opinions; my job was to bring clarity to requirements and tradeoffs.

Outcomes

Unified tool replacing a patchwork of legacy programs, improving workflow efficiency

Asynchronous collaboration, reducing emails and speeding up design review cycles

Cleaner, more polished outputs for client presentations

Increased learning support for “novice” users through contextual guidance

Internal cost savings + new revenue potential projected at ~$300M/year

Internally, the tool became a core asset for biostatisticians managing high-risk, highly regulated work—especially during COVID-era resource constraints.

Senior Delivery Architect, IQVIA

“Moira delivered an outstanding design roadmap and provided valuable insights for the app that went beyond simply generating a nice interface. Her work is timely and of highest quality.”

What I’d do differently

Introduce faceted search earlier to address the scale of design options

Prototype more rigorously with R engineers to validate edge-case states sooner

Formalize a collaborative “design review ritual” across statisticians to speed alignment

Invest earlier in progressive disclosure patterns for dense input and output pages

These would shorten the refinement cycle and strengthen long-term usability.